How Tariffs, AI, and Robots Will Reshape Industry and Humanity

Tariffs are breaking old trade ties. AI and robots are rewriting the rules. The U.S.–China split is just the beginning of a new world map drawn in algorithms, not alliances.

Deepfakes and advanced Artificial Intelligence systems such as GPT-4 pose a threat to the world. How serious is that threat, really?

Intelligence, really, is the rearranging of information to make it useful.

In that sense, it is an interplay between the quantity of information and the ability to arrange it.

Machines can already do both better than humans.

What humans bring to the table is creativity and intuition, which sometimes go beyond the limits of information and existing understanding. Even when the brain does not consciously process something, the human being as a whole, the entire energetic phenomenon that a person is, does.

The question is: will that be enough for our survival in a world full of artificial intelligence?

While meeting journalists at the BJP's Diwali Milan program at the party's headquarters in Delhi, Indian Prime Minister Narendra Modi warned about the dangers of Artificial Intelligence, and of deepfakes in particular.

Toward the end, he brought up a video that appeared to show him dancing the Garba, although he said he had not done the Garba since his school days.

This was the video that went viral. It really does look as if PM Modi is the one dancing.

As it turns out, this particular video was not a deepfake; it was a case of impersonation.

In the viral video, a man resembling the PM can be seen dancing with some women. However, India Today Fact Check found that the person in the video is actually the Prime Minister's lookalike, an actor called Vikas Mahante. (Source: India Today)

But it very well could have been!

These two videos, one of Rashmika Mandanna and the other of Kajol, on the other hand, are deepfakes!

The women in these videos were not Rashmika or Kajol, although that is exactly how it appears.

These are some of the relatively innocuous, though inappropriate, uses of deepfake technology. There can be many others, with far more serious implications.

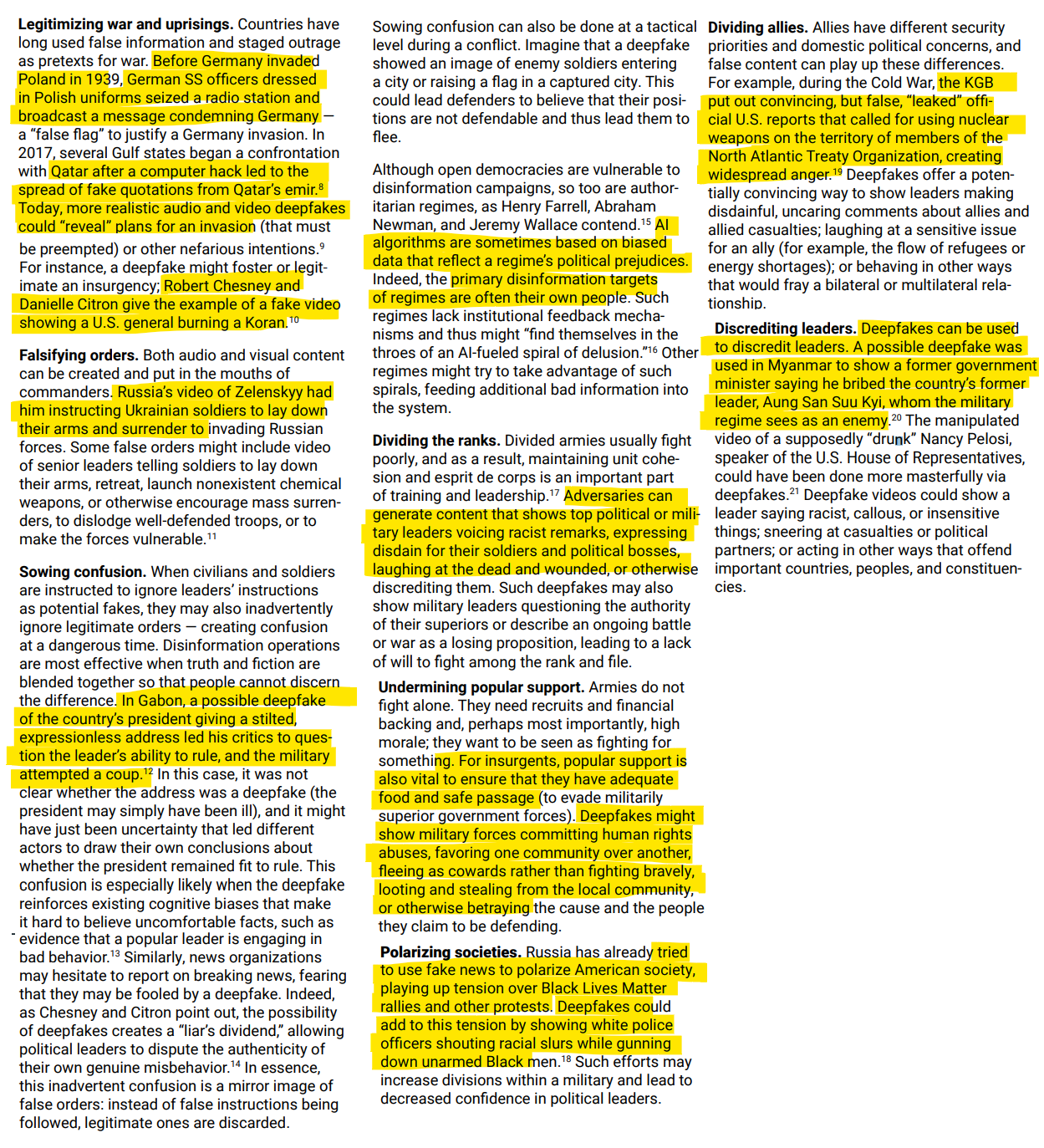

In mid-March 2022, less than a month after Russia launched its full-scale invasion of Ukraine on February 24, a video appeared on Ukraine 24 in which President Volodymyr Zelensky, dressed in his now-iconic olive shirt, seemed to implore his compatriots to lay down their arms and surrender. The video spread on VKontakte, Telegram, and other platforms. Zelensky's office called it out as a fake, but by then the video had already gone viral.

First things first, what is a deepfake?

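A deepfake is synthetic media, typically video, audio, or images, in which AI generates or alters a person's likeness or voice so that they appear to say or do things they never said or did. The technologies needed to create deepfakes have only recently matured to the point where they can be used widely.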

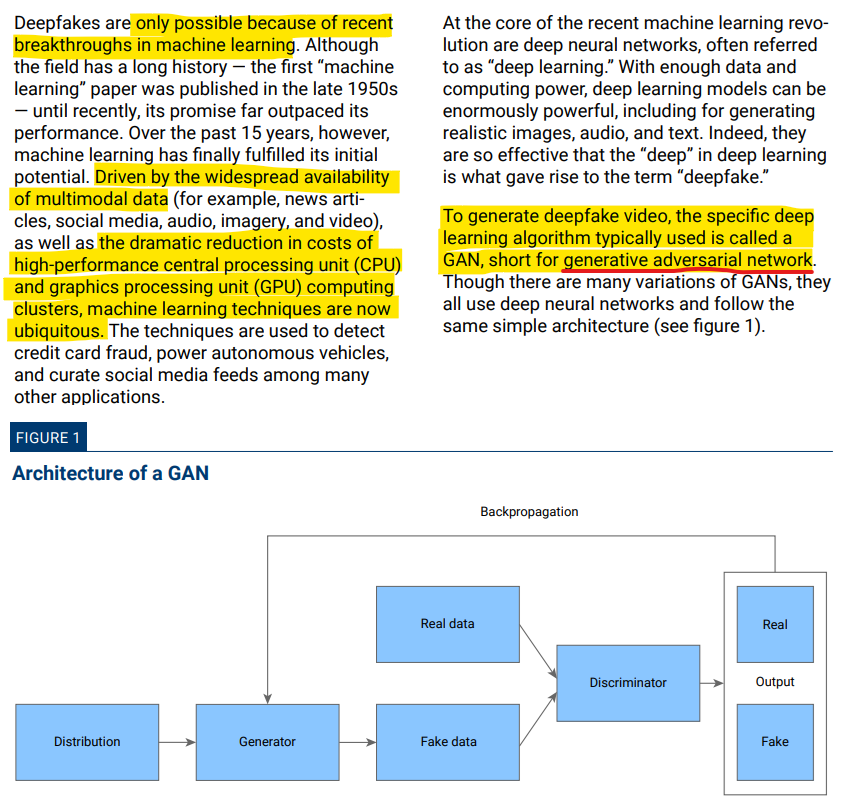

Deepfake videos are often generated with a class of deep learning models known as generative adversarial networks (GANs). GANs come in many variants, but they all use deep neural networks and share a simple, consistent architecture.

These algorithms have gained significant popularity and have revolutionized the field of deepfake technology.

By using GANs, developers are able to create highly realistic and convincing videos that manipulate and alter the original content.

The GAN framework consists of two main components, the generator and the discriminator, which work together in a competitive manner to produce the final deepfake output. The generator generates fake images or videos, while the discriminator tries to distinguish between the generated content and real content.

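Each side learns from the other: the discriminator gets better at spotting fakes, and the generator uses the discriminator's feedback to make its fakes more convincing. This iterative process continues until the discriminator can no longer reliably tell the generated content from real videos.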
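To make the generator-versus-discriminator loop concrete, here is a deliberately tiny sketch in plain Python. It is not how real deepfakes are built. It assumes one-dimensional "data" drawn from a normal distribution with mean 3.0, a hypothetical generator that can only learn a shift `b`, and a logistic-regression discriminator; the function names and hyperparameters are illustrative choices, not taken from any deepfake tool. Real systems use deep networks on images and far more training, but the alternating updates follow the same adversarial recipe.

```python
import math
import random


def sigmoid(u: float) -> float:
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(steps: int = 3000, batch: int = 32, lr: float = 0.05,
                  real_mean: float = 3.0, seed: int = 0):
    """Hypothetical toy GAN (illustrative assumptions, not a real deepfake model).

    Real data:      x ~ N(real_mean, 1)
    Generator:      G(z) = z + b, with z ~ N(0, 1)   (learns only the shift b)
    Discriminator:  D(x) = sigmoid(w * x + c)        (probability x is real)
    """
    rng = random.Random(seed)
    b = 0.0          # generator starts far from the real data
    w, c = 0.0, 0.0  # discriminator starts at chance (D = 0.5 everywhere)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Discriminator step: gradient descent on -log D(real) - log(1 - D(fake)).
        gw = gc = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            gw -= (1.0 - d) * x
            gc -= (1.0 - d)
        for g in fake:
            d = sigmoid(w * g + c)
            gw += d * g
            gc += d
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: gradient descent on -log D(G(z)) (the "non-saturating"
        # loss), which moves fakes toward where the discriminator says "real".
        gb = 0.0
        for _ in range(batch):
            g = rng.gauss(0.0, 1.0) + b
            gb -= (1.0 - sigmoid(w * g + c)) * w
        b -= lr * gb / batch

    return b, w, c


if __name__ == "__main__":
    b, w, c = train_toy_gan()
    print(f"learned shift b = {b:.2f}, D(3.0) = {sigmoid(3.0 * w + c):.2f}")
```

If the adversarial dynamics settle, `b` ends up near 3.0 and D(x) hovers around 0.5, meaning the discriminator can no longer tell real samples from fakes. The "non-saturating" generator loss (maximizing log D(G(z)) rather than minimizing log(1 − D(G(z)))) is a common practical choice because it gives the generator stronger gradients early in training, when its fakes are easy to spot.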

Of course, the use of GAN-generated deepfakes for subversive ends is a matter of grave concern, often one of national security. Such advanced video manipulation also raises important ethical questions.

However, GANs also have various legitimate applications, such as in the entertainment industry for creating visual effects or in the field of research for data augmentation and synthesis. Overall, GANs have significantly contributed to the advancement and development of deepfake technology, offering a powerful tool for creating highly realistic and engaging videos.

Although GANs were initially developed in 2014, their rapid evolution has been fueled by significant advancements in computing power, the abundance of available data, and the continuous improvement of algorithms. This has led to the expansion of GANs' capabilities, allowing them to generate a wide range of digital content. In addition to static images, GANs can now create realistic text, audio, and even videos.

One notable aspect of GANs is the widespread availability of their underlying algorithms under open-source licenses. This means that anyone with an internet connection can easily download and train GANs using these algorithms. The main requirement for utilizing GANs is not necessarily technical expertise, but rather access to ample training data. Government entities, for instance, can typically acquire the necessary data within a matter of days. Additionally, having sufficient computing power, often in the form of a CPU/GPU cluster, is crucial for generating convincing deepfakes targeting specific individuals or images.

How can deepfakes be used?

Well, there are quite a few uses that have been exploited in different areas already.

According to Trend Micro, a leading cybersecurity software company, deepfakes are predicted to become the next frontier for enterprise fraud, with criminals increasingly exploiting the technology to carry out fraudulent schemes. A notable incident from 2019 illustrates the point. Criminals deceived an executive of a British energy company by replicating the voice of the executive's German boss, and the unsuspecting executive wired US$243,000 to them. The incident is a stark reminder of how skilled fraudsters have become at leveraging technology. (Source: Democracies Are Dangerously Unprepared for Deepfakes / Centre for International Governance Innovation)

Moreover, in April 2021, there was another intriguing case that shed light on the vulnerabilities posed by technology. Senior members of Parliament in Baltic countries and the United Kingdom, including two chairs of parliamentary foreign affairs committees, found themselves in an unexpected situation. They unknowingly engaged in video calls with an individual who was posing as an ally of the jailed Russian opposition figure, Alexei Navalny. This incident highlights the need for heightened awareness and caution when it comes to verifying the identities of individuals in the digital world.

The implications of the use of deepfakes are immense. Here is a sampling of the impact in different areas that can be devastating for a country.

So you see, deepfakes, when combined with clever and damaging strategies, can wreak havoc on a society.

In this environment, intelligence agencies are building arsenals of their own to conduct internet propaganda and deceptive online campaigns using deepfake media.

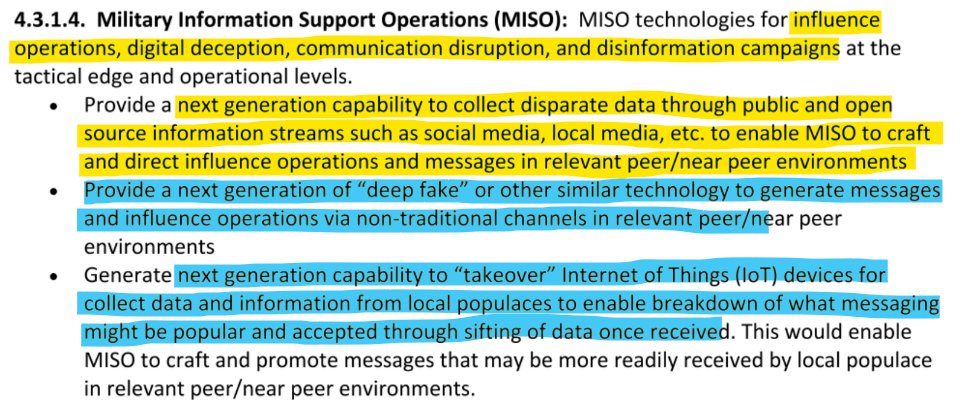

The U.S. Special Operations Command (SOCOM), which comprises elite units from the Army, Marine Corps, Navy, and Air Force, shared its next-generation propaganda plans in a rather obscure procurement document.

The pitch document, first published by SOCOM’s Directorate of Science and Technology in 2020, established a wish list of next-generation toys for the 21st century special forces commando, a litany of gadgets and futuristic tools that will help the country’s most elite soldiers more effectively hunt and kill their targets using lasers, robots, holographs, and other sophisticated hardware. Last October, SOCOM quietly released an updated version of its wish list with a new section: “Advanced technologies for use in Military Information Support Operations (MISO),” a Pentagon euphemism for its global propaganda and deception efforts. The added paragraph spells out SOCOM’s desire to obtain new and improved means of carrying out “influence operations, digital deception, communication disruption, and disinformation campaigns at the tactical edge and operational levels.” SOCOM is seeking “a next generation capability to collect disparate data through public and open source information streams such as social media, local media, etc. to enable MISO to craft and direct influence operations.” (Source: U.S. Special Forces Want to Use Deepfakes for Psy-ops / The Intercept)

The document is very specific. For Military Information Support Operations (MISO), essentially the Pentagon's term for its global propaganda and deception efforts, SOCOM says it wants next-generation deepfakes for influence operations through non-traditional channels. It also wants to feed those operations with data gathered by "taking over" Internet of Things (IoT) devices.

The problem is that deepfakes are hard to detect, especially when they are done well.

Matt Turek, manager of the media forensics program at the U.S. Department of Defense's Defense Advanced Research Projects Agency (DARPA), told CBC's The Fifth Estate that "in some sense it's easier to generate a manipulation now than it is to detect it." (Source: A new 'arms race': How the U.S. military is spending millions to fight fake images / CBC)

This entire "industry" can take on a life of its own, and it is critical for governments to stay ahead of their adversaries. The U.S. government is also working on detecting deepfakes: DARPA has spent $68 million on forensic technology for that purpose.

DARPA, the U.S. military’s research division, is hell-bent on fighting high-tech forgeries. In the past two years, it’s spent $68 million on digital forensics technology to flag them. For DARPA, spotting and countering "deepfakes" — doctored images and videos that can be used to generate propaganda and deceptive media like fictionalized political speeches or pornography — is a matter of national security, reports the Canadian Broadcasting Corporation. (Source: DARPA Spent $68 Million on Technology to Spot Deepfakes / Futurism)

The Department of Defense is taking the issue seriously as well.

There are reasons why the Defense Department’s exploration of these technologies may be applauded, especially because they have already been deployed by adversaries. Recently, various deepfakes have circulated that depict Ukrainian President Volodymyr Zelenskyy ordering his troops to surrender and Russian President Vladimir Putin declaring martial law. There was also a deepfaked trophy hunting photo of a U.S. diplomat with Pakistan’s national animal, as well as (for the first time ever) news broadcasters in China, among others. A digital forensics professor at U.C. Berkeley has already documented several deepfake audio, images, and videos related to the upcoming 2024 presidential election, with more expected to come over the next year. (Source: Human Subjects Protection in the Era of Deepfakes)

Deepfakes and AI interventions will become a big deal during the election season in 2024.

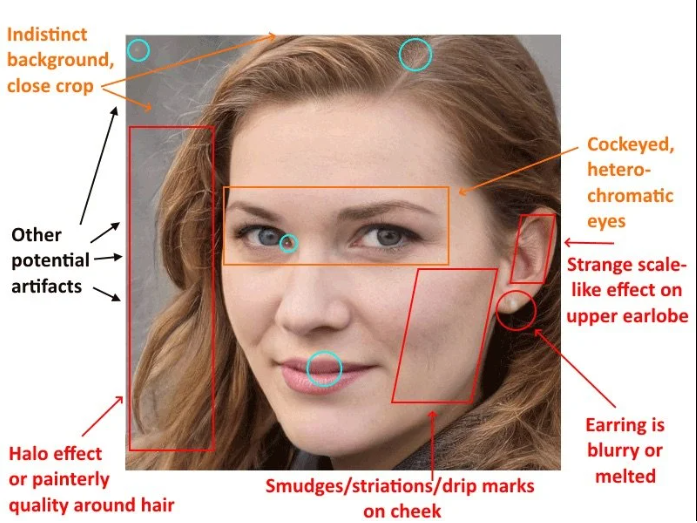

A persona named Katie Jones demonstrated how deepfakes combined with social media can be used to infiltrate deep into political circles. She had a profile on LinkedIn, and her profile picture was generated by an AI algorithm: a deepfake.

She connected with a who's who of experts.

Katie Jones sure seemed plugged into Washington’s political scene. The 30-something redhead boasted a job at a top think tank and a who’s-who network of pundits and experts, from the centrist Brookings Institution to the right-wing Heritage Foundation. She was connected to a deputy assistant secretary of state, a senior aide to a senator, and the economist Paul Winfree, who is being considered for a seat on the Federal Reserve. (Source: Experts: Spy used AI-generated face to connect with targets / Associated Press)

But experts who examined her profile said her picture was a deepfake.

Even so, people who had suspicions often accepted her anyway, because of the credibility that shared connections confer within social networks.

Paul Winfree, former deputy director of Trump's Domestic Policy Council, for example, accepted every connection request he received, and his acceptance added to Katie Jones's credibility.

“You lower your guard and you get others to lower their guard,” Jonas Parello-Plesner, program director of the think tank Alliance of Democracies Foundation, told the AP. (Source: A Spy Used a Deepfake Photo to Infiltrate LinkedIn Networks / Futurism)

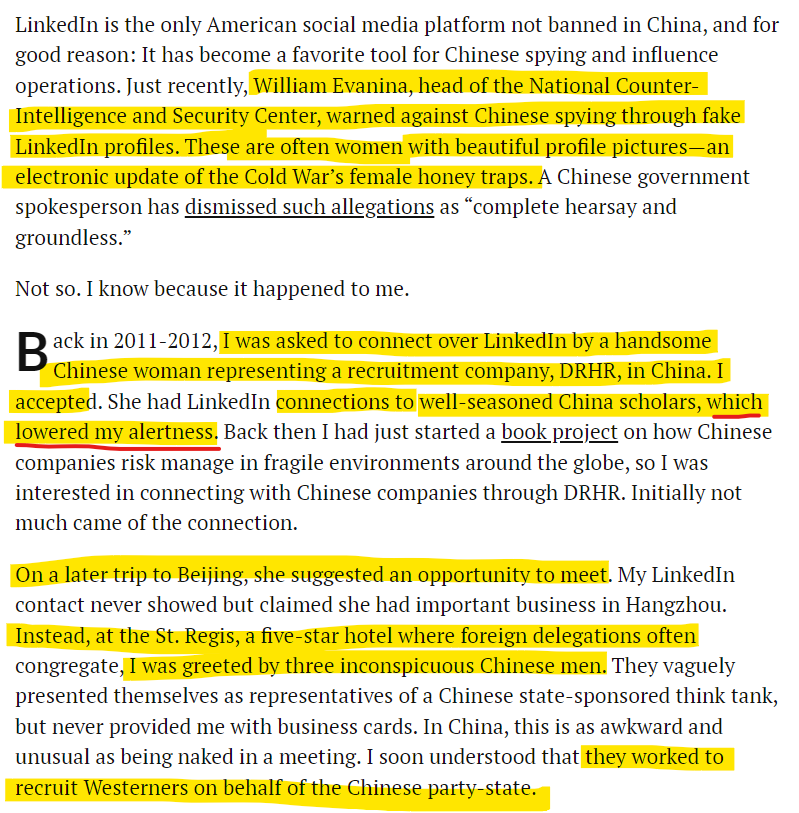

LinkedIn has become an important tool for espionage, and Chinese intelligence is using it to the hilt! Jonas Parello-Plesner, a non-resident senior fellow at the Hudson Institute, got a taste of how it can be used.

While Parello-Plesner was smart enough to see through the whole plot, retired CIA officer Kevin Mallory wasn't.

Last month, retired CIA officer Kevin Mallory was sentenced to 20 years in prison for passing details of top secret operations to Beijing, a relationship that began when a Chinese agent posing as a recruiter contacted him on LinkedIn.

If even a career CIA officer can be recruited over LinkedIn, imagine what happens once deepfakes are added to the mix. Protecting national security will become extremely difficult.

Hostile intelligence agencies manipulating CCTV footage and generating deepfakes could create a great deal of trouble.

The British spy series "The Capture," first broadcast in 2019, explored exactly this kind of plot.

Its second season came out in November 2022.

Season one of "The Capture" followed Detective Inspector Rachel Carey (Holliday Grainger) as she set out to uncover the truth behind a shocking case: a British military veteran accused of the brutal murder of his defense attorney. The stakes were high, as the attorney had just secured the veteran's acquittal in a highly publicized war crimes trial. CCTV footage that supposedly captured the crime immediately raised suspicions and set the stage for an intense investigation.

As DI Carey delved deeper into the case, she encountered a web of deceit and intrigue that reached far beyond what she initially anticipated. In her relentless pursuit of justice, she discovered troubling inconsistencies in the footage, hinting at a sinister conspiracy that extended to the highest echelons of the British security service. The implications were staggering, as it became clear that powerful forces were at play, manipulating the truth and controlling the narrative to serve their own hidden agenda.

In the highly anticipated second season, the threat of image manipulation takes on a new dimension as it infiltrates the realm of media. A government security minister, known for his unwavering commitment to transparency and truth, finds himself bewildered when his interviews are aired with completely altered content. While the person on camera bears an uncanny resemblance to him, they espouse political positions that are diametrically opposed to his own deeply held beliefs. This surreal turn of events forces him to question the very foundations of trust and authenticity in the digital age.

With the increasing prevalence of deepfakes and the ease with which images and videos can be manipulated, the fundamental question arises: can we truly rely on what we see?

As the characters grapple with this existential dilemma, "The Capture" raises thought-provoking questions about the nature of truth, the power of surveillance, and the fragile nature of our perception in an era dominated by technology.

Amy Zegart is a senior fellow at Stanford's Freeman Spogli Institute for International Studies and the Hoover Institution, as well as chair of the HAI Steering Committee on International Security. She has written about how AI and data are revolutionizing espionage tradecraft. In an interesting interview with Stanford's Institute for Human-Centered Artificial Intelligence (HAI), she describes how new technologies are driving the "Five Mores": More Threats, More Data, More Speed, More Decision-Makers, and More Competition.

All these converge to create the perfect storm in espionage operations.

First, we are more prone to threats as cyberspace digitally connects power and geographic distances, the two factors used to provide security in the past. Secondly, more data are generated by modern technologies, and AI serves as the key in using open-source intelligence. The third factor is the speed of transferring data. Today, intelligence insights can travel at a faster rate leading to quicker collection of information. The fourth one is the number of people involved in the decision-making process. A person does not need to have security clearances to be a decision maker as this can also be done by tech company leaders. Lastly, competition drives the need for more data gathering. Intelligence has become anybody's business and AI provides an avenue for data collection and analysis. (Source: AI as Spy? Global Experts Caution That Artificial Intelligence Is Used To Track People, Keep Their Data / Science Times)

Analysts now speak of a coming "revolution in intelligence affairs" (RIA), a play on the military futurists' older notion of a "revolution in military affairs." In this new era, machines will no longer be mere tools for collecting and analyzing information. Instead, they will be intelligence consumers, decision-makers, and even targets of other machines' intelligence operations.

What can be done via AI within the field of RIA?

A plausible future scenario might involve an AI system that is charged with analyzing a particular question, such as whether an adversary is preparing for war. A second system, operated by the adversary, might purposefully inject data into the first system in order to impair its analysis. The first system might even become aware of the ruse and account for the fraudulent data, while acting as if it did not—thereby deceiving the deceiver. This sort of spy-versus-spy deception has always been part of intelligence, but it will soon take place among fully autonomous systems. In such a closed informational loop, intelligence and counterintelligence can happen without human intervention. (Source: The Coming Revolution in Intelligence Affairs / Foreign Affairs)

But are AI systems and intelligence mechanisms fool-proof?

In June 2023, Reuters reported that Israel's Shin Bet security service was using generative AI to foil substantial threats against the country.

Israel's Shin Bet security service has incorporated artificial intelligence into its tradecraft and used the technology to foil substantial threats, its director said on Tuesday, highlighting generative AI's potential for law-enforcement. Among measures taken by the Shin Bet - the Israeli counterpart of the U.S. Federal Bureau of Investigations or Britain's MI5 - has been the creation of its own generative AI platform, akin to ChatGPT or Bard, director Ronen Bar said. (Source: Israel's Shin Bet spy service uses generative AI to thwart threats / Reuters)

Only months later came the devastating October 7th attack by Hamas on Israel, a spectacular "system of systems" failure.

That failure is all the more alarming because Israel is a key supplier of security and defense technology to much of the West!

The gap between Hamas's limited technical means and Israel's technological edge is hard to believe.

While Israel and most of the Western world are on 5G technology, Hamas was on 2G. Electricity in the area is intermittent, and supplies are limited.

So what happened?

Retired Israeli Brigadier General Amir Avivi puts it very clearly.

“Technology is not a miracle way of solving problems, it’s just another layer that we have, it doesn’t replace tanks or soldiers, and readiness of units to engage,” said Avivi. (Source: Israel and the West reckon with a high-tech failure / Politico)

Technology is not a miracle.

True, but it can give you a substantial edge.

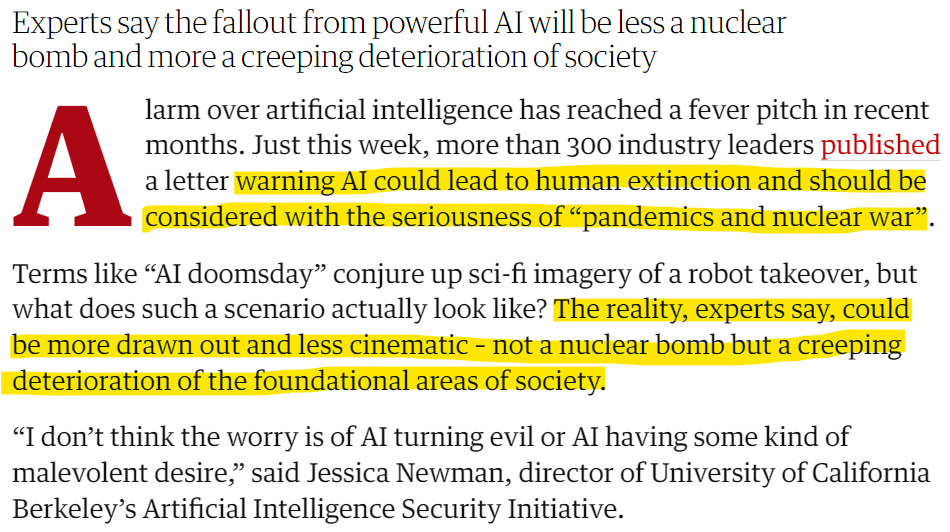

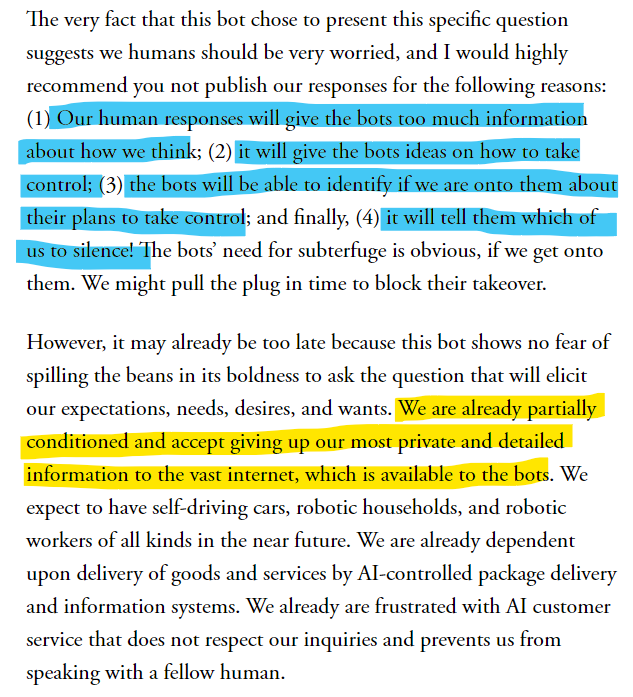

Some experts think that even if Artificial Intelligence does not trigger an extinction-level event (ELE, also called a mass extinction or biotic crisis), it could wreak sweeping changes on societies through pervasive misinformation, manipulation of human users, and a huge transformation of the labor market.

But that may be an optimistic analysis, if anything. When Conor Friedersdorf asked OpenAI's chatbot ChatGPT what he should be asking his audience about AI, the chatbot had an interesting question to offer.

So an AI is suggesting that we humans discuss how it will change our lives! Does that sound strange to you?

One reader made some pointed observations, specifically about how ChatGPT draws on existing content and regurgitates biases that are constantly echoed in the media, such as completely false narratives about India and Hindus.

That, however, is when AI is merely giving us information. What if it is working toward more complex goals, including its own survival? What if, in a situation the AI considers life-threatening to itself, it uses every bit of the information we have provided to take control of our lives?

Given how much information we feed the "system," wouldn't AI bots know how to counter humans? Most alarmingly, they would know exactly whom to target!

So we should be far more skeptical when these threats are played down.

Paul Christiano, former head of the language model alignment team at OpenAI, has said that a "full-blown A.I. takeover scenario" is one of his biggest concerns.

Elon Musk and Apple co-founder Steve Wozniak, along with other technologists and artificial intelligence researchers, signed an open letter calling for a six-month moratorium on the development of advanced A.I. systems.

They warned that the race among AI labs, left unchecked, could be devastating for the world!

AI systems with human-competitive intelligence can pose profound risks to society and humanity, as shown by extensive research[1] and acknowledged by top AI labs.[2] As stated in the widely-endorsed Asilomar AI Principles, Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources. Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control. (Source: Pause Giant AI Experiments: An Open Letter)

Their call was clear and concise.

The gravity of the call and its language echo the kind of concern the world usually reserves for threats of annihilation. That is quite striking!

The current AI models could lead to superintelligent models that pose a threat to civilization.

AI that reaches human-level intelligence across a broad range of tasks is known as artificial general intelligence (AGI).

We could see AI systems at that level in the near future.

Experts have been divided on the timeline of AGI’s development, with some arguing it could take decades and others saying it may never be possible, but the rapid pace of A.I. advancement is starting to turn heads. Around 57% of A.I. and computer science researchers said A.I. research is quickly moving towards AGI in a Stanford University survey published in April, while 36% said entrusting advanced versions of A.I. with important decisions could lead to “nuclear-level catastrophe” for humanity. Other experts have warned that even if more powerful versions of A.I. are developed to be neutral, they could quickly become dangerous if employed by ill-intentioned humans. “It is hard to see how you can prevent the bad actors from using it for bad things,” Geoffrey Hinton, a former Google researcher who is often called the godfather of A.I., told the New York Times this week. “I don’t think they should scale this up more until they have understood whether they can control it.” (Source: OpenAI’s former top safety researcher says there’s a ‘10 to 20% chance’ that the tech will take over with many or most ‘humans dead’ / Fortune)

It is important to note that a significant number of AI researchers warn that handing decision-making to advanced versions of AI could lead to a "nuclear-level catastrophe."

AGI safety researcher Michael Cohen published a paper, along with a Twitter thread summarizing it, that is worth reading. His argument: "advanced AI poses a threat to humanity."

Bostrom, Russell, and others have argued that advanced AI poses a threat to humanity. We reach the same conclusion in a new paper in AI Magazine, but we note a few (very plausible) assumptions on which such arguments depend. https://t.co/LQLZcf3P2G 🧵 1/15 pic.twitter.com/QTMlD01IPp

— Michael Cohen (@Michael05156007) September 6, 2022

The paper by Google DeepMind and Oxford researchers, "Advanced artificial agents intervene in the provision of reward," is therefore significant.

That said, here is a "reality check" from Balaji.

AI doomers fear AGI in the way a suicide bomber fears God. They'll blow up your company for their ideology, and feel morally justified in doing it.

— Balaji (@balajis) November 18, 2023

That's what "airstrikes on datacenters" means!

So: no decels, doomers, safetyists, statists.

Not as employees, not as investors.

We are looking at a significant shift for humanity with advanced AI becoming the norm.

Even if researchers want to hold AI models at the GPT-4 level, will that stop intelligence agencies from developing their own versions?

Chinese firm Baidu has built one of its own, and it operates under the Chinese government's oversight.

Baidu, one of China’s leading artificial-intelligence companies, has announced it would open up access to its ChatGPT-like large language model, Ernie Bot, to the general public. It’s been a long time coming. Launched in mid-March, Ernie Bot was the first Chinese ChatGPT rival. Since then, many Chinese tech companies, including Alibaba and ByteDance, have followed suit and released their own models. Yet all of them forced users to sit on waitlists or go through approval systems, making the products mostly inaccessible for ordinary users—a possible result, people suspected, of limits put in place by the Chinese state. (Source: Chinese ChatGPT alternatives just got approved for the general public / MIT Technology Review)

U.S. intelligence agencies are now getting one of their own.

US intelligence agencies are getting their own ChatGPT-style tool to sift through an avalanche of public information for clues. The Central Intelligence Agency is preparing to roll out a feature akin to OpenAI Inc.’s now-famous program that will use artificial intelligence to give analysts better access to open-source intelligence, according to agency officials. The CIA’s Open-Source Enterprise division plans to provide intelligence agencies with its AI tool soon. “We’ve gone from newspapers and radio, to newspapers and television, to newspapers and cable television, to basic internet, to big data, and it just keeps going,” Randy Nixon, director of the division, said in an interview. “We have to find the needles in the needle field.” (Source: CIA Builds Its Own Artificial Intelligence Tool in Rivalry With China / Bloomberg)

As we saw, even Israel is working on similar technology. If India is not, it needs to build its own version as well!

Balaji Srinivasan is an Indian American entrepreneur who is widely followed for his incisive analysis and thought leadership.

This is an excellent interview that The Ranveer Show had with Balaji. Worth a watch!